To make reliability improvements tangible, you need a meaningful way to quantify and track the reliability of your systems and services. This "reliability score" should indicate at a glance how likely a service is to withstand real-world causes of failure, without having to wait for an incident to happen first. Gremlin's Reliability Score feature lets you do just that.

When you add a service to Gremlin, Gremlin automatically generates a default suite of reliability tests for you to run on it. These tests contribute to a reliability score, which is based on how many reliability tests you've run and how many have passed or failed. This lets you measure the resilience of the service and how much additional reliability work your team may need to put into it. In addition, because Gremlin tracks your score over time, you can easily see how your reliability has improved and quantify the value of your reliability work.

But what exactly does this score represent, and how does Gremlin calculate it? In this blog post, we pick apart Gremlin's reliability score so you can get the full picture of how it works and how to customize it to your specific requirements.

What is a reliability score?

A reliability score is a calculated value between 0 and 100 that represents how reliable a particular service is. We define a service as a set of functionality provided by one or more systems within an environment, such as an authentication or checkout service, and reliability is how well we can trust that service to withstand turbulent conditions like node failures, exhausted resources, and slow or unavailable dependencies. In other words, the reliability score is based on the output from a suite of tests that measures how well we can depend on a service to remain available.

Each service that you define in Gremlin is assigned a reliability score starting at 0. To increase your score, you'll need to demonstrate that your service can pass various tests. The first set of tests, called Detected Risks, is entirely automated and simply uses the Gremlin agent to scan your service's configuration details to highlight reliability risks.

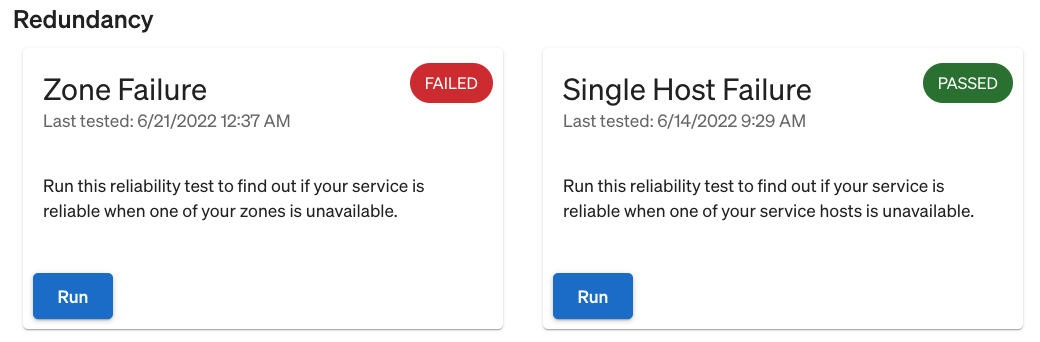

The second set consists of reliability tests that must be intentionally run, such as autoscaling CPU and memory, zone and host redundancy, and dependency failures. Each test exercises a specific behavior of your service while Gremlin simultaneously monitors your service's availability via Health Checks, which are metrics that indicate the health and stability of your service. If running a test causes any of your Health Checks to become unhealthy, then the test is marked as a failure. Otherwise, it's marked as passed.
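The pass/fail rule above can be sketched in a few lines of Python. This is only an illustration of the rule as described, not Gremlin's implementation: the `HealthCheckSample` type and the `reliability_test_passed` function are hypothetical names invented for this example.

```python
from dataclasses import dataclass


@dataclass
class HealthCheckSample:
    """One observation of a Health Check during a test run (hypothetical type)."""
    name: str      # label for the Health Check, e.g. "latency"
    healthy: bool  # True if the check reported healthy at this observation


def reliability_test_passed(samples: list[HealthCheckSample]) -> bool:
    """Return True only if no Health Check became unhealthy during the run.

    Note: this sketch treats an empty list as a pass, which is an
    arbitrary choice made for brevity.
    """
    return all(sample.healthy for sample in samples)
```

A single unhealthy observation is enough to mark the whole test as failed, which matches the "any of your Health Checks" wording above.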

How is the reliability score calculated?

The reliability score is calculated in three steps:

- Each reliability test is given a score between 0 and 100 based on whether your service passed or failed. Detected Risks contribute either 0 or 100 based on whether your service is vulnerable to the risk.

- Tests are grouped into different categories. Each category has a score that's simply the average of the scores of the tests in that category.

- The scores from every category are added up and averaged to provide the service's reliability score.

Reliability test score

Each reliability test can have one of four scores depending on whether the test passed, failed, has not yet been run, or passed but is outdated (i.e. hasn't been run within the past month). The value of these scores is shown here:

| Test Status | Score |
|---|---|
| Test passed | 100 |
| Test passed, but has not been run in the past month | 75 |
| Test failed | 50 |
| Test not yet run | 0 |

We give 50 points for a failed test because it shows that effort has been taken to check the service's reliability, even though the service failed the test. We also provide a reduced score for tests that passed previously, but haven't been run in the past month. This is to encourage teams to run these tests frequently to ensure services keep passing as new code is deployed, and ideally to automate tests on a regular schedule or as part of a CI/CD pipeline. The only way to get a full (i.e. passing) score is to run the reliability test all the way through without any of the service's Health Checks failing, and to keep running the test at least monthly.
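As a minimal sketch, the four scores could be expressed as a lookup like the one below. Several details are assumptions made for illustration: the function name `reliability_test_score`, the `"passed"`/`"failed"` strings, and the choice to treat "the past month" as 30 days. The text also doesn't say whether a failed result ages, so this sketch leaves failed results at 50 regardless of when they ran.

```python
from datetime import datetime, timedelta
from typing import Optional

# Assumption: "the past month" is approximated as 30 days.
FRESHNESS_WINDOW = timedelta(days=30)


def reliability_test_score(
    last_result: Optional[str],     # "passed", "failed", or None if never run
    last_run: Optional[datetime],   # when the test last ran, or None
    now: datetime,
) -> int:
    """Map a test's latest result and age to a score of 0, 50, 75, or 100."""
    if last_result is None or last_run is None:
        return 0  # test not yet run
    if last_result == "failed":
        return 50  # credit for testing, even though the service failed
    if last_result == "passed":
        # Full credit only while the passing result is still fresh.
        return 100 if now - last_run <= FRESHNESS_WINDOW else 75
    raise ValueError(f"unknown result: {last_result!r}")
```

Passing `now` in explicitly, rather than reading the clock inside the function, keeps the sketch easy to test.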

Category score

Reliability tests are grouped into categories. Each category represents a known, critical reliability risk. Gremlin provides several pre-defined categories:

- Scalability

- Redundancy

- Dependencies

- Detected Risks

- Other

Each category contributes an equal percentage to the total reliability score. This percentage varies if a Test Suite doesn't use a specific category: for example, if a service has no dependencies, then the Dependencies category won't factor into the score. If a Test Suite uses all five categories, then each category contributes 1/5 of the total score.

Within each category, we take the average of your test scores to get the category score. For example, let's say we want to test redundancy. There are two redundancy reliability tests: Zone and Host. We passed the Host test, but we failed the Zone test.

This means that our category score is 75 ((50 + 100) / 2). This isn't a terrible score, but it does show that we still have more work to do in this category before we can feel confident about this service's redundancy.
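The redundancy example works out as follows in Python. The `category_score` function is a hypothetical helper written for this illustration; it simply averages the per-test scores.

```python
def category_score(test_scores: list[float]) -> float:
    """Average the scores of the tests in one category."""
    if not test_scores:
        raise ValueError("a category needs at least one test to be scored")
    return sum(test_scores) / len(test_scores)


# Host test passed (100), Zone test failed (50).
redundancy = category_score([100, 50])
print(redundancy)  # 75.0
```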

Service reliability score

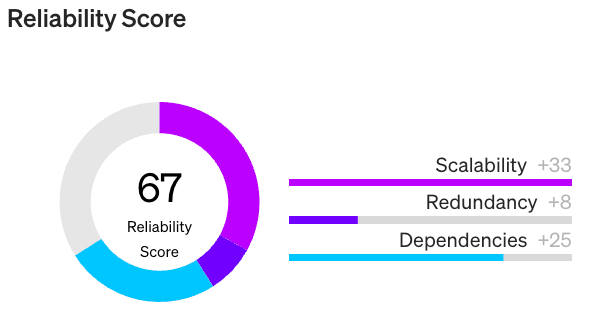

The service reliability score is the sum of each category's contribution, out of a maximum of 100. To get the reliability score, we first need to determine the percentage each category contributes to the total score. We start by dividing 100 by the number of categories. If we have three categories, then each category contributes roughly 33% (100 / 3). Next, for each category, we multiply this maximum contribution by the category score to get its contribution to the reliability score.

For example, we know that our redundancy category score is 75%. We know that each category's maximum contribution is roughly 33, so to get the redundancy category's contribution, we multiply 33 * 0.75, which is 24.75. We then add 24.75 to the reliability score, then repeat this step for each of the other categories.
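The same arithmetic can be sketched in Python. The function names are hypothetical, and the Scalability and Dependencies scores in the usage example are made-up values for illustration. The sketch uses the unrounded weight (100 / 3) rather than 33, so the redundancy contribution comes out as 25 instead of 24.75. With equal weights, the result is the same as the plain average of the category scores.

```python
def category_contributions(category_scores: dict[str, float]) -> dict[str, float]:
    """Return each category's contribution to the service score.

    Only categories present in the dict count, mirroring how a category
    that a Test Suite doesn't use drops out of the calculation.
    """
    if not category_scores:
        return {}
    weight = 100 / len(category_scores)  # maximum contribution per category
    return {
        name: weight * (score / 100)
        for name, score in category_scores.items()
    }


def service_reliability_score(category_scores: dict[str, float]) -> float:
    """Sum the per-category contributions into a score out of 100."""
    return sum(category_contributions(category_scores).values())


# Redundancy is 75 as in the example; the other two values are invented.
scores = {"Redundancy": 75, "Scalability": 100, "Dependencies": 50}
print(round(service_reliability_score(scores), 2))
```

Returning the per-category contributions separately makes it easy to see which category is holding the overall score down.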

The end result will look similar to this:

Tracking your reliability score over time

Your reliability score is more than just a point-in-time measure of reliability. Gremlin also tracks your score over time so you can see how the reliability of your service has changed as you continue to test and improve it. This is especially useful for reviewing past test results, determining when you last tested this service, and proving to your manager that you've been putting effort into improving your service's reliability.

What is a good score?

Reliability Scores work a bit like the grades you'd receive in school. You can still get to 50% even if you fail every test. Getting to 100% and keeping it there means you've tested every area and are keeping your scores high by running tests at least monthly. Not every service is equally important to your application's reliability, so you may want to set a goal of 100% on critical services, but set a lower target for less critical services. You might also set lower score requirements for specific test categories (e.g. a service needs to pass Scalability tests, but not Redundancy).

Customizing your score

You can go beyond Gremlin's default score by creating custom Test Suites, as each Test Suite has a corresponding reliability score. A Test Suite is a collection of reliability tests that we can assign to individual teams to define and enforce a reliability standard for that team. For example, one team might have stricter requirements for redundancy, while another team might focus more on dependency testing. Test Suites let us fine-tune our testing practices for individual teams. That way, the score becomes much more effective at indicating how well each team is performing against its own standards.

You can learn how to define a custom test suite in Gremlin by following this tutorial.

Using the score in practice

Being able to proactively test and measure reliability before an incident is valuable on its own, but how can we drive even greater business results across the organization?

As a first step, we can use the score to manage teams more effectively by setting reliability standards that align to organizational best practices, known past failures, or compliance requirements. We can see how teams score relative to each other, monitor for changes in scores, identify risks, and think about improvements we can make. Teams now have a positive reliability metric that they can use to proactively plan improvements. Contrast this with the retrospective meetings most teams run after incidents.

The next step is to automate. Once we begin passing tests, we can schedule them to ensure our services are continuously validated as systems change. We could also use the Gremlin API to run tests from a CI/CD platform or another tool. This reduces the toil that teams must put into reliability testing, making them more efficient.

Third, we can use our service reliability score to prevent unreliable code from reaching production by tying it into our CI/CD pipeline. For example, we could add a condition to the pipeline so that new changes aren't deployed unless our reliability score is above 90. If bad code causes one or more of our reliability tests to fail in staging, we can automatically prevent the problem from reaching customers and remind our teams to fix reliability issues before deploying.
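A deployment gate of this kind might look like the sketch below. It only shows the threshold check: how the pipeline obtains the current score is deliberately left out, and `should_deploy` and `gate_exit_code` are hypothetical names rather than part of any Gremlin tooling.

```python
def should_deploy(score: float, threshold: float = 90.0) -> bool:
    """Allow a deployment only when the score is strictly above the threshold."""
    return score > threshold


def gate_exit_code(score: float, threshold: float = 90.0) -> int:
    """Translate the decision into a process exit code for a pipeline step.

    0 lets the pipeline continue; 1 makes the step fail and blocks the deploy.
    """
    return 0 if should_deploy(score, threshold) else 1
```

A pipeline step could pass the result to `sys.exit()` so that a score at or below the threshold fails the build.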

Lastly, we can go beyond Gremlin's default tests by creating custom Test Suites and assigning them to individual Gremlin teams, as described in the customization section above. Because each team is then scored against its own standards, the score becomes a much more effective indicator of how well that team is performing.

There’s a lot you can do with reliability scores to operationalize reliability; these are just a few of the most common ways we see this being adopted at scale.

Conclusion

Translating something as complex as reliability into a numerical score isn't always clear cut. A score of 90 doesn't mean that your service will only work 90% of the time. Instead, it represents how well your service stands up to the different failure scenarios that you've put it through, and how it compares to other services across your organization. It also shows the amount of effort that you and your teams have put into making your services as resilient as possible, and how your reliability posture is changing over time.

In short, having a consistent suite of reliability tests and a numeric measure of your services' results from those tests is key to improving reliability across any organization.

To see how reliability scoring would work in your environment, sign up for a free trial of Gremlin.